Neural network architecture optimization is a difficult but important task. Because neural models are hard to interpret, it is almost impossible to propose an optimal network architecture for a given task (usually only general guidance is available, e.g. which kind of network suits classification or clustering). The optimization is usually carried out by an expert, involves many rounds of fully training different networks, and is very time-consuming. However, several classical techniques exist for automatic optimization of neural network architecture based on the mathematical properties of neural networks. There are two approaches to solving this problem:

Evolutionary algorithms, being universal optimization algorithms, are naturally also applied to the problem of neural network architecture optimization. The general structure of such an algorithm is as follows:
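As a rough illustration only, a generic evolutionary loop for architecture search might look like the sketch below. It is not necessarily the exact scheme meant here: the genotype encoding (a tuple of hidden-layer sizes), the `toy_fitness` function standing in for "train the network and measure its error", and all parameter values are assumptions made for the example.

```python
import random
from typing import Tuple

Genotype = Tuple[int, ...]  # assumed encoding: hidden-layer sizes, e.g. (8,)

def toy_fitness(g: Genotype) -> float:
    """Hypothetical stand-in for training a network and measuring its error.
    Lower is better; the 'ideal' total of 12 hidden neurons is arbitrary."""
    return abs(sum(g) - 12) + 0.1 * len(g)

def mutate(g: Genotype, rng: random.Random) -> Genotype:
    """Change the size of one randomly chosen layer, keeping it at least 1."""
    layers = list(g)
    i = rng.randrange(len(layers))
    layers[i] = max(1, layers[i] + rng.choice([-2, -1, 1, 2]))
    return tuple(layers)

def evolve(pop_size: int = 10, generations: int = 30, seed: int = 0) -> Genotype:
    rng = random.Random(seed)
    # 1. Initialise a random population of single-hidden-layer architectures.
    population = [(rng.randint(1, 20),) for _ in range(pop_size)]
    for _ in range(generations):
        # 2. Evaluate and rank the individuals.
        population.sort(key=toy_fitness)
        # 3. Select the better half as parents (truncation selection).
        parents = population[: pop_size // 2]
        # 4. Create offspring by mutation and form the next generation.
        children = [mutate(rng.choice(parents), rng)
                    for _ in range(pop_size - len(parents))]
        population = parents + children
    return min(population, key=toy_fitness)

best = evolve()
print(best, toy_fitness(best))
```

Because the parents survive unchanged into the next generation, the best fitness in the population never gets worse from one generation to the next. In a real setting, `toy_fitness` would be replaced by the (expensive) training and validation of the encoded network.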

EMAS, being a universal optimization technique, adapts easily to the problem of neural network optimization. Each network is entrusted to an agent, and every agent trains its own network. During training, the output of the network may be evaluated, and based on these values the agent exchanges energy with its neighbors.
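The energy exchange can be pictured with a minimal sketch. It assumes a simple rule that is not spelled out in the text: when two neighboring agents meet, the one whose network currently has the larger error hands a fixed portion of its energy to the other. The `Agent` class, the `exchange_energy` function, and the portion size are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass

@dataclass
class Agent:
    name: str
    energy: float
    error: float  # current evaluation of the agent's network (lower is better)

def exchange_energy(a: Agent, b: Agent, portion: float = 10.0) -> None:
    """Move up to `portion` energy from the agent with the larger error
    to the agent with the smaller error (assumed rule). Total energy is conserved."""
    if a.error == b.error:
        return  # no winner, nothing is exchanged
    winner, loser = (a, b) if a.error < b.error else (b, a)
    amount = min(portion, loser.energy)  # an agent cannot give more than it has
    loser.energy -= amount
    winner.energy += amount

agents = [Agent("a1", 50.0, 0.30), Agent("a2", 50.0, 0.10), Agent("a3", 5.0, 0.50)]
exchange_energy(agents[0], agents[1])  # a2 has the lower error and gains 10
exchange_energy(agents[1], agents[2])  # a3 only has 5 left to give

for agent in agents:
    print(agent.name, agent.energy)
```

In this sketch the energy of well-performing agents grows at the expense of poorly performing ones, while the sum of energy in the system stays constant.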

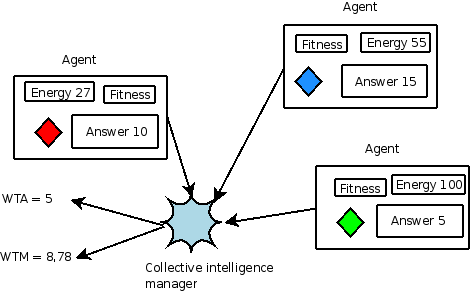

To correctly evaluate the outcome of an evolutionary system (in terms of, e.g., classification or prediction accuracy), several techniques for managing such "collective intelligence" have been proposed. A neural-agent system may be perceived as collectively intelligent because it consists of agents, each of which contains a network and thus becomes a kind of expert that can present its own answer to the given problem. To compute the answer of the whole system (containing many experts), the following techniques may be used:

Neural-based predicting EMAS merges the collective intelligence management approach with the EMAS idea. What remains is the handling of the analyzed data: each subsequent value of the predicted time series is presented at the input of every agent in the system as soon as it becomes available. After every agent has computed its prediction, the answer of the whole system is computed using PREMONN or a simple WTA approach and returned to the user. The prediction error becomes the main component of the fitness computation. A more detailed description of this idea may be found in ByrDorNaw:IAI2002, ByrDor:ICCS2007.
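The data flow described above can be sketched for the WTA (winner-takes-all) case. The sketch assumes that the "winner" is the agent with the smallest accumulated squared prediction error so far, which is one plausible reading and not necessarily the exact rule used in the cited papers. The predictors `last_value` and `linear_trend` are trivial stand-ins for trained networks, and the function name `wta_forecast` is hypothetical.

```python
from typing import Callable, List, Sequence

Predictor = Callable[[Sequence[float]], float]

def wta_forecast(predictors: List[Predictor], series: Sequence[float]) -> List[float]:
    """Feed the series value by value; at each step return the prediction of
    the agent with the lowest accumulated squared error (assumed WTA rule)."""
    errors = [0.0] * len(predictors)
    answers = []
    for t in range(1, len(series)):
        history = series[:t]                       # values available so far
        predictions = [p(history) for p in predictors]
        winner = min(range(len(predictors)), key=errors.__getitem__)
        answers.append(predictions[winner])        # answer of the whole system
        actual = series[t]                         # true value becomes available
        for i, pred in enumerate(predictions):
            errors[i] += (pred - actual) ** 2      # would feed each agent's fitness
    return answers

def last_value(history: Sequence[float]) -> float:
    return history[-1]

def linear_trend(history: Sequence[float]) -> float:
    if len(history) < 2:
        return history[-1]
    return history[-1] + (history[-1] - history[-2])

series = [1.0, 2.0, 3.0, 4.0, 5.0]
answers = wta_forecast([last_value, linear_trend], series)
print(answers)  # [1.0, 2.0, 4.0, 5.0]
```

On this linearly growing series the system initially follows `last_value` (ties go to the first agent) and switches to `linear_trend` once its accumulated error becomes lower.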